One of my favorite presentations to give is called, “Changing Your Habits to Improve the Performance of Your T-SQL.” After giving this presentation in Austin, TX this past January, I had one of my students contact me. He saw in one of my demonstration how bad cursors can perform. He wanted to see if his stored procedure could be written using set theory.

One of my favorite presentations to give is called, “Changing Your Habits to Improve the Performance of Your T-SQL.” After giving this presentation in Austin, TX this past January, I had one of my students contact me. He saw in one of my demonstration how bad cursors can perform. He wanted to see if his stored procedure could be written using set theory.

Scenario

He has an application that can only accept 3 data types (NVARCHAR, NUMERIC, and DATETIME), and as all normal database schemas do, his database has more than 3 data types. He needed to identify which column had data types that could not be used and determine which of the 3 data types could be used for that column. The actual conversion was handled by another process, so the information to do the conversion was stored in a table.

The Cursor Approach

His approach was to use a cursor to cycle through all the columns in the provided table, analyze each column, determine the new data type, and store the information in a table variable. After the cursor was completed, the data in the table variable was written to a permanent table for the next process to use.

This approach isn’t necessarily bad. If you are only running it infrequently and you needed to write this stored procedure quickly, then it’s fine. But if this type of stored procedure needs to be run frequently, then it should be rewritten.

The Reason For the Rewrite

Every SQL statement used in a stored procedure can get it’s own execution plan, depending on whether or not it was parameterized. Parameterized queries that are identically written (including case, spaces, and line breaks), can reuse an execution plan. Those that are not, will receive their own execution. Each unique execution plan is stored in the cache. When there are multiple similar execution plans stored in the Cache, it’s called “Cache Bloat.”

| Note: This does not apply to databases with the “optimize for ad hoc workloads” setting turned on, but that would be a different blog post. |

What does this have to do with Cursors? Each time the Cursor loops (and this includes While loops), each SELECT statement is executed independently and receives its own execution plan. If the query is parameterized, then execution plans can be reused. You can see this by using Extended Events, or Profiler.

| Note: If you try this out, don’t do it in production. You’ll be adding load to your SQL Server. |

The Cursor

Below is a similar query to what I was sent. This particular solution looks very complicated. There are table variables, one holding the good data types, and one that will hold the needed information for the conversion. There are also several variables to be used in the cursor. Finally the table variable with the needed information, is written to the external table.

USE DemoProgramming

GO

SET NOCOUNT ON;

/* These are the parameters that would be used in the stored procedure.*/

DECLARE

@schema AS NVARCHAR(200) = 'dbo'

,@tableName AS NVARCHAR(200) = 'SalesHeader'

/* table variable will approved data types.*/

DECLARE @legalDataTypes TABLE

(

Data_Type NVARCHAR(500)

);

INSERT INTO @legalDataTypes

(Data_Type)

VALUES

('nvarchar'),

('numeric'),

('datetime');

/* use information_schema.columns to discover information about column types of input table */

DECLARE @ColumnInformation TABLE

(

ColumnName NVARCHAR(100) NOT NULL

PRIMARY KEY

,ColumnDataType NVARCHAR(20) NOT NULL

,ColumnLength NVARCHAR(10)

,NumericPrecision INT

,NumericScale INT

,OrdinalPosition INT

);

INSERT INTO @ColumnInformation

(ColumnName

,ColumnDataType

,ColumnLength

,NumericPrecision

,NumericScale

,OrdinalPosition

)

SELECT

COLUMN_NAME

,DATA_TYPE

,CHARACTER_MAXIMUM_LENGTH

,NUMERIC_PRECISION

,NUMERIC_SCALE

,ORDINAL_POSITION

FROM

INFORMATION_SCHEMA.COLUMNS

WHERE

TABLE_NAME = @tableName

AND TABLE_SCHEMA = @schema;

/* The table will be populated iteratively and eventually inserted into the meta data table */

DECLARE @resultSet TABLE

(

ColumnName NVARCHAR(100)

,ColumnDataType NVARCHAR(20)

,ColumnLength NVARCHAR(10)

,Position INT

);

/* These will be set each loop */

DECLARE @columnLength AS NVARCHAR(10)

DECLARE @newDataType AS NVARCHAR(20);

/*setup cursor*/

DECLARE @columnName NVARCHAR(100);

DECLARE @dataType NVARCHAR(100);

DECLARE @characterMaximumLength NVARCHAR(10);

DECLARE @ordinalPosition INT;

DECLARE @numericPrecision INT;

DECLARE @numericScale INT;

DECLARE allColumnsCursor CURSOR

FOR

SELECT

ColumnName

,ColumnDataType

,ColumnLength

,NumericPrecision

,NumericScale

,OrdinalPosition

FROM

@ColumnInformation;

OPEN allColumnsCursor;

FETCH NEXT FROM allColumnsCursor INTO @columnName, @dataType, @characterMaximumLength, @numericPrecision, @numericScale, @ordinalPosition;

WHILE @@FETCH_STATUS = 0

BEGIN

IF @dataType NOT IN (SELECT

Data_Type

FROM

@legalDataTypes)

/* illegal data types*/

BEGIN

IF @dataType IN ('varchar', 'char', 'nchar')

BEGIN

SET @newDataType = N'nvarchar';

SET @columnLength = CAST(@characterMaximumLength AS NVARCHAR(10));

END;

ELSE

IF @dataType IN ('decimal', 'float', 'real', 'money', 'smallmoney')

BEGIN

SET @newDataType = N'numeric';

SET @columnLength = '(19,8)';

END;

ELSE

IF @dataType IN ('bigint', 'smallint', 'tinyint', 'binary', 'varbinary', 'int')

BEGIN

SET @newDataType = N'numeric';

SET @columnLength = '(19,0)';

END;

ELSE

IF @dataType IN ('bit')

BEGIN

SET @newDataType = N'numeric';

SET @columnLength = '(1,0)';

END;

ELSE

IF @dataType IN ('smalldatetime', 'date', 'time', 'datetimeoffset', 'datetime2', 'timestamp')

BEGIN

SET @newDataType = N'datetime';

SET @columnLength = NULL;

END;

ELSE

BEGIN

DECLARE @ret_string VARCHAR(255);

EXEC sys.xp_sprintf @ret_string OUTPUT, '@@columnName = %s has unrecoginzed @dataType = %s', @columnName, @dataType;

RAISERROR(@ret_string,16,1);

RETURN;

END;

END;

ELSE

BEGIN

/* legal data types, don't change datatype but capture correct columnLength */

-- VALUES ('nvarchar'),('numeric'),('datetime');

SET @newDataType = @dataType;

IF @dataType = 'nvarchar'

BEGIN

SET @columnLength = CAST(@characterMaximumLength AS NVARCHAR(10));

END;

ELSE

IF @dataType = 'numeric'

BEGIN

SET @columnLength = '(' + CAST(@numericPrecision AS NVARCHAR(10)) + ',' + CAST(@numericScale AS NVARCHAR(10)) + ')';

END;

ELSE

BEGIN

SET @columnLength = NULL;

END;

END;

INSERT INTO @resultSet

(ColumnName

,ColumnDataType

,ColumnLength

,Position

)

VALUES

(@columnName

,@newDataType

,@columnLength

,@ordinalPosition

);

FETCH NEXT FROM allColumnsCursor INTO @columnName, @dataType, @characterMaximumLength, @ordinalPosition, @numericPrecision, @numericScale;

END;

CLOSE allColumnsCursor;

DEALLOCATE allColumnsCursor;

/* populate two meta data tables*/

INSERT INTO dbo.DataTypeConversion_Cursor

(TableName

,ColumnName

,ColumnDataType

,ColumnLength

,OrdinalPosition

)

SELECT

@tableName

,ColumnName

,ColumnDataType

,ColumnLength

,Position

FROM

@resultSet;

The New Solution

The new solution consists of a permanent table that contains the information, per data type to do the conversion. That allows a join between the conversion information and the metadata found in the system SQL view, “INFORMATION_SCHEMA.COLUMNS”. After the join, the new information can be directly inserted into the permanent table for the next process to consume.

/*Create this table one time*/

CREATE TABLE dbo.DataTypeConversion

(

OldDataType NVARCHAR(20)

,NewDataType NVARCHAR(20)

,columnLength NVARCHAR(20)

)

/*Values for the Data Types that will be converted.*/

INSERT INTO dbo.DataTypeConversion

(OldDataType, NewDataType, columnLength)

VALUES

('decimal', N'numeric', '(19,8)')

,('Float', N'numeric', '(19,8)')

,('real', N'numeric', '(19,8)')

,('money', N'numeric', '(19,8)')

,('smallmoney', N'numeric', '(19,8)')

,('varchar', N'nvarchar', '-1')

,('char', N'nvarchar', '-1')

,('nchar', N'nvarchar', '-1')

,('bigint', N'numeric', '(19,0)')

,('smallint', N'numeric', '(19,0)')

,('tinyint', N'numeric', '(19,0)')

,('binary', N'numeric', '(19,0)')

,('varbinary', N'numeric', '(19,0)')

,('int', N'numeric', '(19,0)')

,('bit', N'numeric', '(1,0)')

,('smalldatetime', N'datetime', NULL)

,('date', N'datetime', NULL)

,('time', N'datetime', NULL)

,('datetimeoffset', N'datetime', NULL)

,('datetime2', N'datetime', NULL)

,('timestamp', N'datetime', NULL)

,('numeric', N'numeric', NULL)

,('nvarchar', N'nvarchar', NULL)

/*paramters that would be used with the Stored Procedure.*/

DECLARE

@Tablename AS VARCHAR(100) = 'SalesHeader'

,@schema AS VARCHAR(20) = 'dbo'

INSERT DataTypeConversion_SetTheory

(TableName

,ColumnName

,ColumnDataType

,ColumnLength

,OrdinalPosition

)

SELECT

c.TABLE_NAME

,c.COLUMN_NAME

,d.NewDataType

/*Conversion for data types*/

,CASE WHEN d.NewDataType = 'nvarchar' THEN CAST(c.CHARACTER_MAXIMUM_LENGTH AS NVARCHAR(10))

WHEN d.OldDataType = 'numeic' THEN '(' + CAST(c.NUMERIC_PRECISION AS NVARCHAR(10)) + ',' + CAST(c.NUMERIC_SCALE AS NVARCHAR(10)) + ')'

ELSE d.columnLength

END AS NewColumnLength

,c.ORDINAL_POSITION

FROM

INFORMATION_SCHEMA.COLUMNS AS c

JOIN dbo.DataTypeConversion AS d ON c.DATA_TYPE = d.OldDataType

WHERE

c.TABLE_NAME = @Tablename

AND c.TABLE_SCHEMA = @schema;

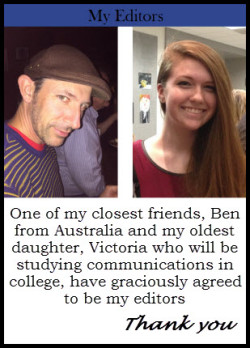

Giving thanks

I want to give thanks to my student, Mark Lai and the company he works for, allowing me to write about this Cursor Transformation. As the geek I am, I thoroughly enjoyed the challenge of thinking outside of the box to rewrite the stored procedure.

![AdobeStock_52521593_thumb[2] AdobeStock_52521593_thumb[2]](https://i0.wp.com/mickeystuewe.com/wp-content/uploads/2015/12/AdobeStock_52521593_thumb2_thumb.jpg?resize=244%2C164)

![11-29-2015 5-37-20 PM_thumb[1] 11-29-2015 5-37-20 PM_thumb[1]](https://i2.wp.com/mickeystuewe.com/wp-content/uploads/2015/12/11-29-2015-5-37-20-PM_thumb1_thumb.jpg?resize=480%2C66)

![11-28-2015 5-00-57 PM_thumb[1] 11-28-2015 5-00-57 PM_thumb[1]](https://i1.wp.com/mickeystuewe.com/wp-content/uploads/2015/12/11-28-2015-5-00-57-PM_thumb1_thumb.jpg?resize=480%2C298)

![11-29-2015 8-05-39 PM_thumb[1] 11-29-2015 8-05-39 PM_thumb[1]](https://i0.wp.com/mickeystuewe.com/wp-content/uploads/2015/12/11-29-2015-8-05-39-PM_thumb1_thumb.jpg?resize=480%2C241)

On Tuesday, July 7th, 2015, I had the privilege of speaking for the

On Tuesday, July 7th, 2015, I had the privilege of speaking for the